Overview

Looped (recurrent) Transformers are an efficient alternative to deep non-shared stacks, but in practice they are almost always trained and evaluated with a fixed number of unrolls. As a result, performance often collapses when the model is run at shorter or longer depths, which makes looped models far less compute-elastic than they should be.

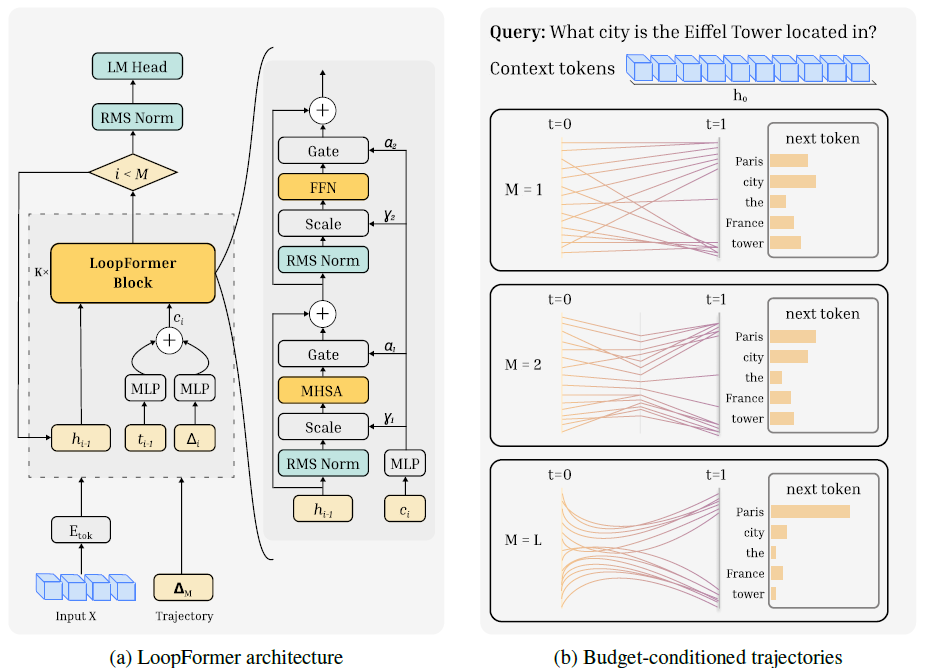

We present LoopFormer, a shortcut-modulated looped language model for budget-conditioned inference. LoopFormer treats iterative refinement as a trajectory in representation space and conditions each loop step on internal time t and step size Δt (“jumps”), so coarser schedules can approximate fine-grained ones with fewer steps.
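To make the (t, Δt) conditioning concrete, here is a minimal sketch of a loop that applies one shared block along a step schedule. It assumes internal time is normalized to [0, 1) and that jumps are uniform. The names `uniform_schedule`, `shared_block`, and `run_loop` are hypothetical, and the toy update rule is only a stand-in for the real Transformer block and its modulation.

```python
from fractions import Fraction
import math


def uniform_schedule(num_steps):
    """Return [(t, dt), ...] covering normalized time [0, 1) in equal jumps."""
    dt = Fraction(1, num_steps)
    return [(i * dt, dt) for i in range(num_steps)]


def shared_block(h, t, dt):
    """Hypothetical stand-in for the shared Transformer block.

    The update is scaled by dt so that one large jump moves the state
    roughly as far as several small ones. This toy rule is an assumption,
    not LoopFormer's actual modulation.
    """
    return [x + float(dt) * (math.cos(float(t)) - x) for x in h]


def run_loop(h, schedule):
    """Apply the same block once per (t, dt) pair in the schedule."""
    for t, dt in schedule:
        h = shared_block(h, t, dt)
    return h


full = uniform_schedule(8)    # fine-grained route: 8 jumps of 1/8
coarse = uniform_schedule(2)  # coarse route: 2 jumps of 1/2

h0 = [0.0, 1.0]
h_full = run_loop(h0, full)
h_coarse = run_loop(h0, coarse)
```

Both schedules cover the same total internal time; the coarse one simply spends fewer block applications getting there, which is the sense in which a coarser schedule can approximate a fine-grained one.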

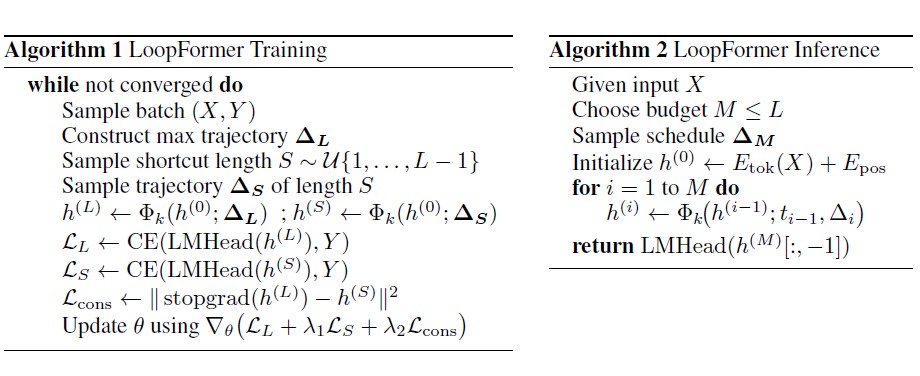

Training uses a shortcut-consistency objective that aligns shorter routes to the full-route final representations (self-distillation within the loop). At inference, users choose a budget M ≤ L and a step schedule, and LoopFormer scales smoothly with compute, without retraining or late-step stagnation. The training and inference algorithms are shown below:

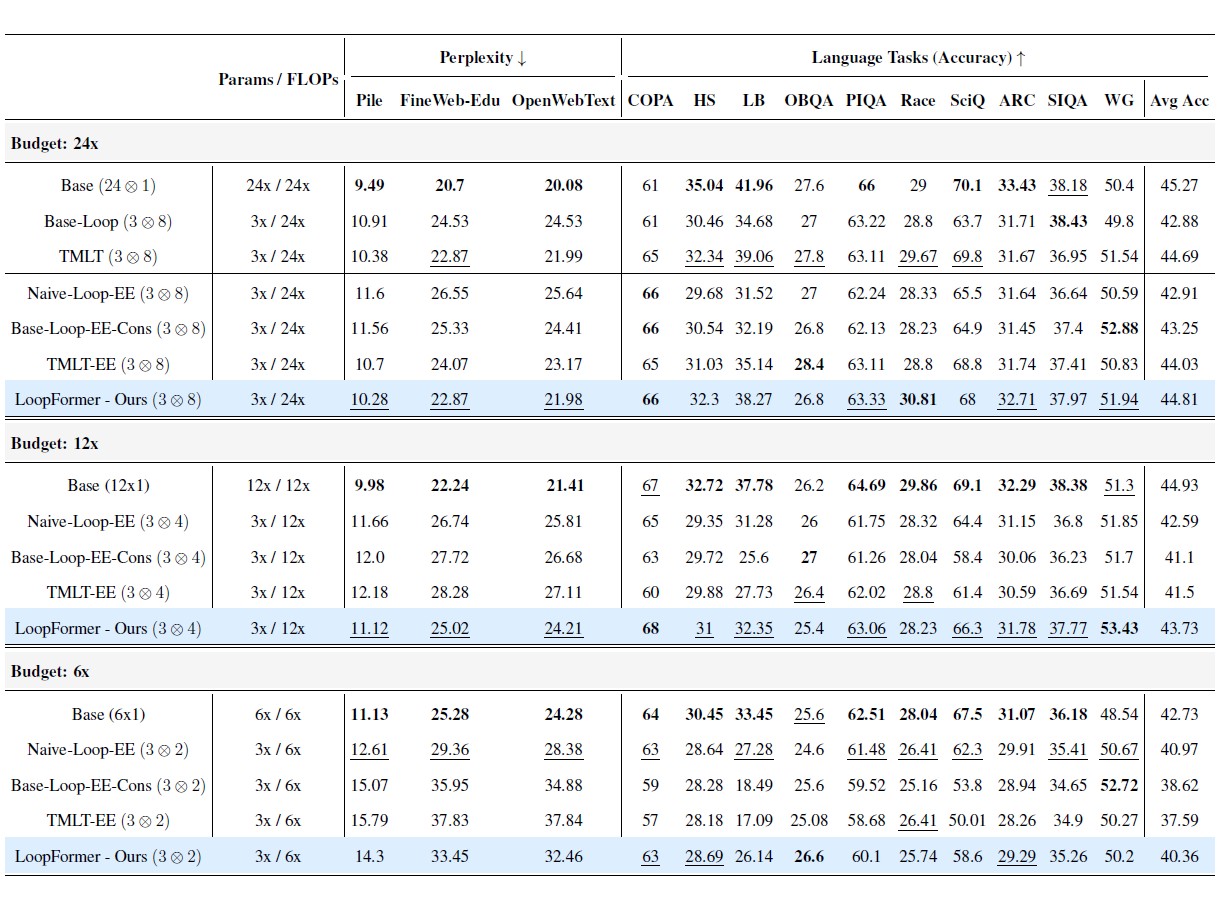
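As a rough illustration of the shortcut-consistency idea, the sketch below compares the final state of a short route with the final state of the full route on a toy looped update. The mean squared error, the uniform schedules, and the helper names (`shared_block`, `rollout`, `shortcut_consistency_loss`) are assumptions made for this example; the algorithm figure above is the authoritative description.

```python
import math


def shared_block(h, t, dt):
    # Hypothetical toy update standing in for the shared Transformer block.
    return [x + dt * (math.cos(t) - x) for x in h]


def rollout(h, num_steps):
    """Run the loop with num_steps uniform jumps over normalized time [0, 1)."""
    dt = 1.0 / num_steps
    for i in range(num_steps):
        h = shared_block(h, i * dt, dt)
    return h


def shortcut_consistency_loss(h0, full_steps, short_steps):
    """Mean squared error between the short-route and full-route final states.

    Assumption: in an actual training loop the full-route output would
    typically be held fixed as the target (no gradient through it), in
    line with the self-distillation framing.
    """
    target = rollout(h0, full_steps)     # full route, L steps
    shortcut = rollout(h0, short_steps)  # shorter route, M <= L steps
    return sum((s - y) ** 2 for s, y in zip(shortcut, target)) / len(target)


h0 = [0.0, 1.0]
L = 8
# Loss for several budgets M <= L; the M == L route matches the target exactly.
losses = {M: shortcut_consistency_loss(h0, L, M) for M in (1, 2, 4, 8)}
```

In this toy setting nothing is learned, so the loss only measures how far each short route lands from the full one. Training would adjust the shared block so that these gaps shrink for every budget.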

Main Results

The table below compares LoopFormer against a range of baselines, including vanilla (non-looped) Transformers, fixed-depth looped Transformers, and adaptive early-exit methods.

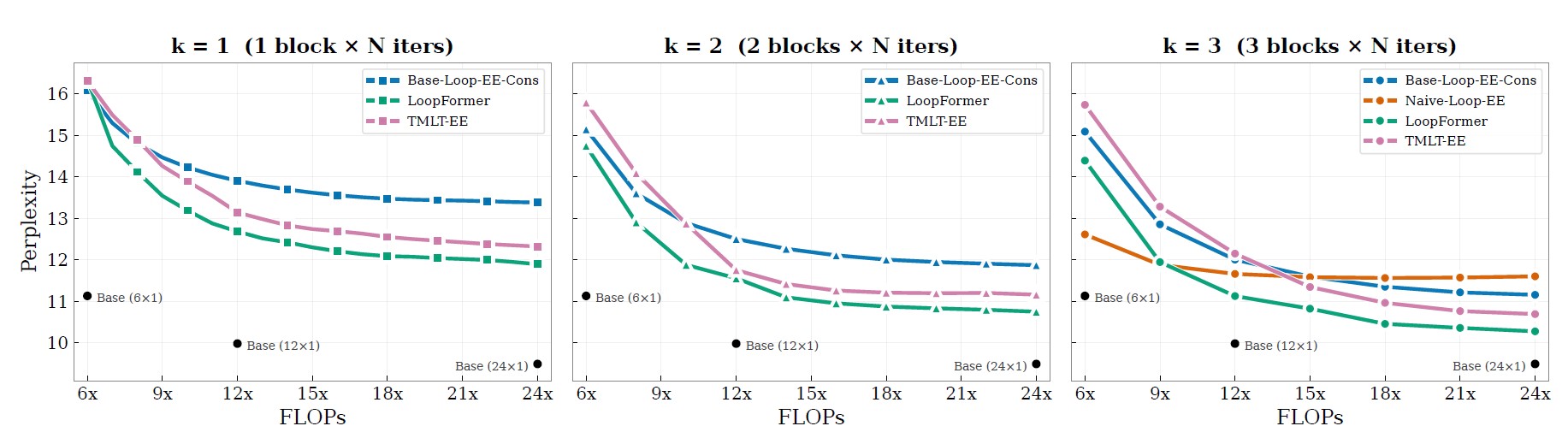

Layers vs. Loops

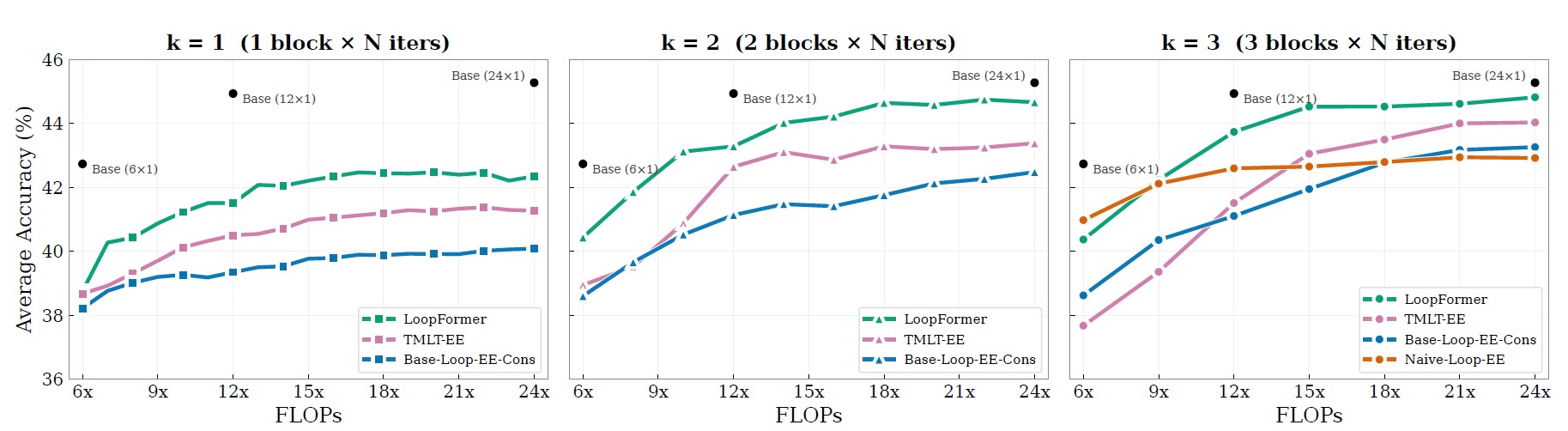

We compare LoopFormer with other depth-elastic baselines by varying the number of blocks and loop steps under similar compute budgets. The figure below reports perplexity across these settings.

The next figure compares zero-shot accuracy across 10 language reasoning benchmarks for the same compute-matched configurations.
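One simple way to think about compute-matched settings is to hold the effective depth (blocks × loop steps) fixed and enumerate the ways to split it. The sketch below does that; the function name `compute_matched_configs` and the depth of 24 are illustrative assumptions, not configurations taken from the experiments.

```python
def compute_matched_configs(effective_depth):
    """List (num_blocks, num_loops) pairs whose product equals effective_depth."""
    return [
        (blocks, effective_depth // blocks)
        for blocks in range(1, effective_depth + 1)
        if effective_depth % blocks == 0
    ]


# Example: every way to reach 24 block applications per forward pass.
configs = compute_matched_configs(24)
for blocks, loops in configs:
    print(f"{blocks:2d} blocks x {loops:2d} loops")
```

Under this assumption all listed settings apply the same number of blocks per forward pass, while the number of distinct (non-shared) blocks, and therefore the parameter count, grows with the first entry of each pair.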

Trajectories

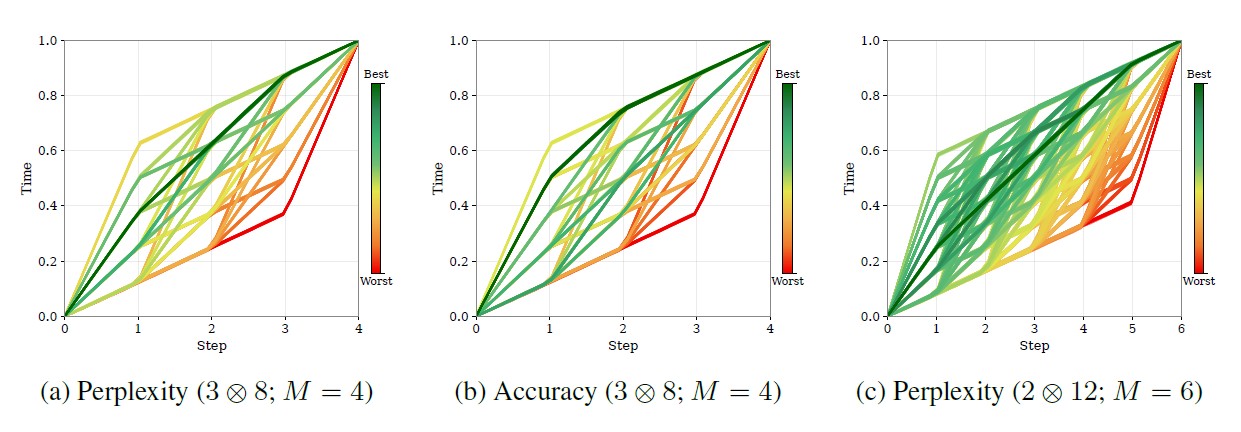

A key idea in LoopFormer is viewing iterative refinement as a trajectory in representation space. Different time/step schedules (“trajectories”) exhibit distinct trade-offs: the trajectory that minimizes perplexity is not always the one that maximizes reasoning accuracy. The figure below compares these behaviors across a range of trajectories.
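To see how many distinct trajectories a given budget admits, the sketch below enumerates every schedule of M jumps whose sizes are integer multiples of 1/L and sum to the full interval. The discretization into L ticks, the normalized time range [0, 1), and the helper names are assumptions for illustration.

```python
from fractions import Fraction


def enumerate_trajectories(total_ticks, num_steps):
    """Yield every way to split total_ticks into num_steps positive integer jumps."""
    if num_steps == 1:
        yield (total_ticks,)
        return
    # Leave at least one tick for each of the remaining steps.
    for first in range(1, total_ticks - num_steps + 2):
        for rest in enumerate_trajectories(total_ticks - first, num_steps - 1):
            yield (first,) + rest


def to_schedule(jumps, total_ticks):
    """Convert integer jumps into (t, dt) pairs on normalized time [0, 1)."""
    schedule, elapsed = [], 0
    for jump in jumps:
        schedule.append((Fraction(elapsed, total_ticks), Fraction(jump, total_ticks)))
        elapsed += jump
    return schedule


L, M = 4, 2
trajectories = list(enumerate_trajectories(L, M))
schedules = [to_schedule(jumps, L) for jumps in trajectories]
```

With L = 4 and M = 2 this yields the jump patterns (1, 3), (2, 2), and (3, 1): the same budget can take a small step first and a large one later, or the reverse. These are the kinds of alternatives whose perplexity and reasoning accuracy can differ.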

BibTeX

@misc{jeddi2026loopformerelasticdepthloopedtransformers,
  title={LoopFormer: Elastic-Depth Looped Transformers for Latent Reasoning via Shortcut Modulation},
  author={Ahmadreza Jeddi and Marco Ciccone and Babak Taati},
  year={2026},
  eprint={2602.11451},
  archivePrefix={arXiv},
  primaryClass={cs.CL},
  url={https://arxiv.org/abs/2602.11451},
}